Introduction: Google deepens its AI creativity push

Google has steadily evolved its AI suite beyond text generation, aiming to make Gemini a multimodal hub for creativity. In its latest move, the company unveiled “Nano Banana”, an experimental image editing model built into the Gemini app. This addition signals Google’s ambition to compete not just with chat assistants like OpenAI’s ChatGPT, but also with design platforms like Adobe Photoshop and AI-native rivals such as Midjourney and Stability AI.

Nano Banana is more than just a novelty name; it represents Google’s attempt to democratize professional-grade image editing while embedding responsible AI safeguards.

What is Nano Banana?

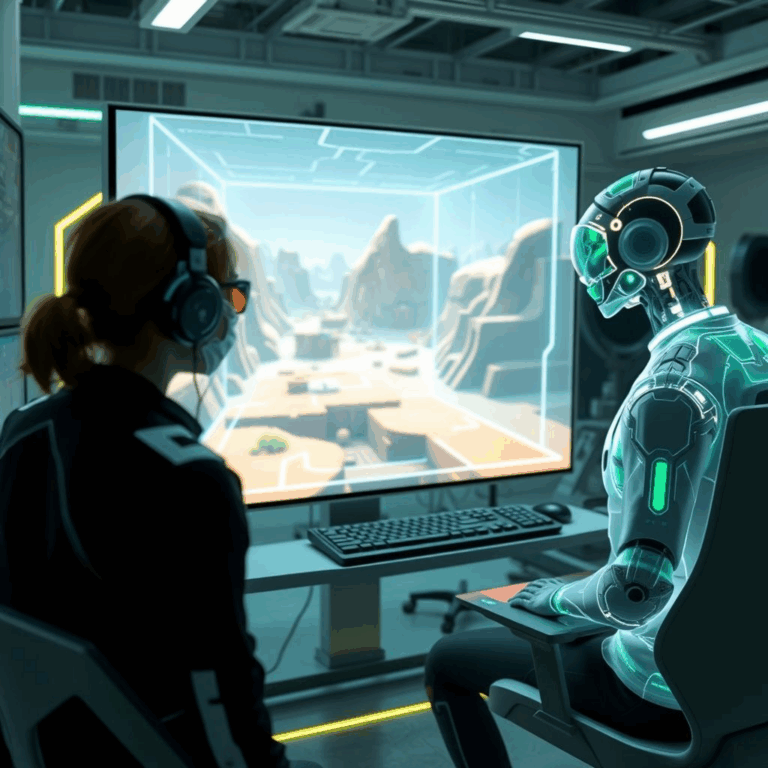

At its core, Nano Banana is a generative image editing system that allows users to transform visuals with a mix of natural-language prompts and intuitive controls. Unlike standalone editors, Nano Banana is fully integrated into Gemini, making text-to-image, image-to-image, and now in-app editing part of the same workflow.

For example, a user can upload a product photo and type: “Make the background a futuristic neon city” or “Turn this plain mug into a ceramic mug with gold accents.” With Nano Banana, edits like these can take seconds rather than hours.

How Nano Banana elevates image workflows

- Fine-grained edits: Users can select specific regions of an image and apply targeted changes, such as swapping clothing textures or modifying facial expressions.

- Iterative refinements: The system supports multiple rounds of adjustments, allowing for rapid prototyping without starting over.

- Context-aware blending: Early demos show realistic shadowing, lighting, and perspective alignment — areas where AI often struggles.

- Cross-modal creativity: Because it lives inside Gemini, Nano Banana can combine text, image, and code prompts. Designers could ask for a “web banner layout” and then tweak it visually in the same interface.

This unified experience reduces the friction between ideation and execution, a major pain point in creative industries.

Examples from Google’s launch

In its announcement, Google highlighted ten demonstration edits showcasing Nano Banana’s range, including:

- Turning a dog photo into a clay figurine while preserving proportions.

- Replacing plain skies with sunset gradients or cyberpunk skylines.

- Converting a business suit into casual wear with fabric-level detail.

- Adjusting object scale realistically, e.g., making a coffee cup larger without breaking table perspective.

- Seamlessly removing background clutter.

These examples echo capabilities popularized by Photoshop’s Generative Fill, but Google’s integration promises lower barriers for everyday users.

Why the creative industry is paying attention

- Designers & freelancers: Faster concept iteration saves billable hours.

- Marketing teams: Mock-ups, campaign visuals, and A/B test creatives can be generated in-house.

- SMBs (small and medium-sized businesses): They gain access to tools that were once locked behind costly Adobe suites.

- Content creators & influencers: Quick customization of photos and thumbnails improves branding consistency.

Some industry analysts argue that Nano Banana positions Google as a serious disruptor in digital content creation, especially as generative AI moves from hobbyist spaces into commercial pipelines.

Challenges and criticisms

Despite the excitement, Nano Banana faces several hurdles:

- Copyright risks: AI-edited images could raise intellectual property (IP) disputes if edits reproduce protected works or closely imitate an artist’s distinctive style.

- Misinformation potential: Tools that seamlessly edit photos can also generate deepfakes or misleading visuals.

- Quality vs. control: Users may still find that AI-generated edits lack the nuance of human designers, particularly for brand-specific aesthetics.

- Market competition: Adobe’s entrenched ecosystem and Midjourney’s artistic strength remain formidable.

Google’s strategy is to differentiate on accessibility and safety rather than raw artistic power.

Responsible AI safeguards

Google emphasized that Nano Banana will include:

- Content provenance metadata (to identify edited media).

- Watermarking for synthetic outputs where appropriate.

- Safety filters to block harmful or explicit manipulations.

- Guided UX nudging users toward responsible use cases.

By foregrounding ethics, Google aims to get ahead of regulatory scrutiny, particularly in Europe, where rules on synthetic media are tightening under the EU AI Act.

Developer and enterprise implications

Google hinted at future API access, opening possibilities for:

- E-commerce platforms auto-generating product images with varied styles.

- Publishing houses adapting illustrations at scale.

- Education tools creating interactive visuals for learning.

Enterprise adoption will likely hinge on governance: audit logs, permission controls, and clear licensing terms that reassure businesses about responsible use.

Future outlook: democratizing design

Nano Banana represents a shift from expert-driven design to AI-assisted democratization. If successful, it could reduce the need for specialized software, making creativity as frictionless as typing a sentence.

But the true test lies in whether creators embrace it as a serious professional tool or dismiss it as another gimmicky AI experiment. With competition heating up, Google must prove that Nano Banana isn’t just playful branding but a game-changer in visual workflows.