CoreWeave OpenAI Deal: A New Era in AI Infrastructure

CoreWeave, the U.S.-based cloud provider specializing in GPU-accelerated infrastructure, announced a landmark $6.5 billion expansion deal with OpenAI this week. The agreement extends and deepens the partnership between the two companies, providing critical support for OpenAI’s ambitious Stargate program, which aims to scale high-performance AI compute capacity across multiple global sites.

The deal signals an ongoing evolution in AI infrastructure: companies like OpenAI are moving from off-the-shelf cloud solutions toward strategic, high-capacity partnerships with specialized providers, ensuring access to GPUs, storage, and networking resources at massive scale.

Understanding the Stargate Program

The Stargate program is OpenAI’s multi-year initiative to build next-generation AI infrastructure capable of supporting ever-larger language models and multimodal AI systems. The program focuses on:

- Massive GPU-scale deployment – Dedicated racks of Nvidia GPUs optimized for high-speed AI training and inference.

- Data center expansion – New facilities worldwide to reduce latency and increase redundancy.

- Operational resilience – Long-term, reserved capacity to maintain predictable access to hardware resources.

- Cost efficiency – Bulk contracts with GPU providers and cloud partners reduce the cost per petaflop of compute.

By partnering with CoreWeave, OpenAI can secure predictable GPU access, ensuring that model training and deployment timelines are not disrupted by spot-market fluctuations or hardware shortages.

Deal Structure and Financial Implications

While specific contract details remain confidential, reports indicate that the CoreWeave OpenAI deal is worth up to $6.5 billion, spread across multiple years. The expansion builds on previous agreements and represents one of the largest dedicated GPU capacity contracts in AI history.

Key Aspects of the Deal:

- Multi-year commitment – The deal locks in GPU availability for OpenAI over several years, which is critical for ongoing model training and inference workloads.

- Reserved capacity – CoreWeave guarantees dedicated servers and GPU racks, enabling uninterrupted experimentation and production usage.

- Hardware coordination – The deal likely includes coordination with Nvidia to secure the latest AI accelerators amid a global shortage of high-performance GPUs.

- Financial scale – The sheer size of the contract indicates how expensive frontier AI compute infrastructure has become, with hardware, energy, and operational costs contributing significantly to the investment.

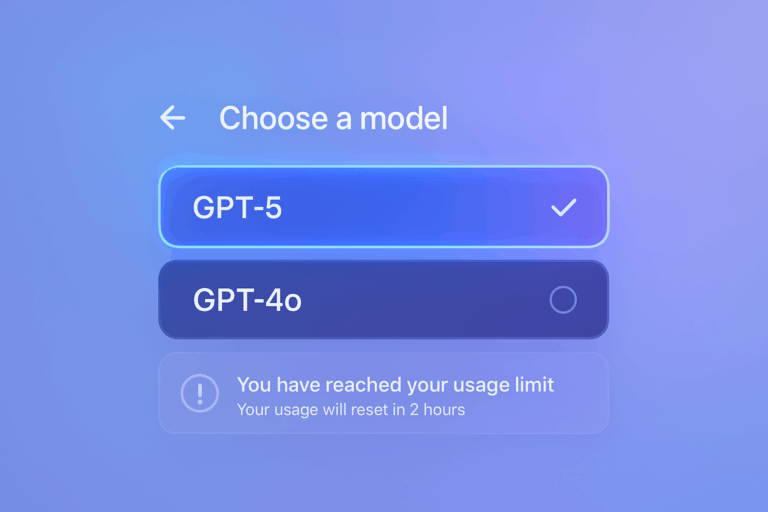

The expansion is a clear statement that OpenAI is investing heavily in infrastructure, not only to support its GPT-5 models but also to position itself competitively against Microsoft, Google, and other hyperscale providers.

Why This Deal Matters: Technical and Operational Perspectives

Predictable GPU Access

Large AI models, especially those with hundreds of billions of parameters, require massive, stable GPU capacity for both training and inference. By securing long-term, reserved access to CoreWeave’s GPU clusters, OpenAI can avoid delays caused by hardware scarcity in public clouds.

Specialized Infrastructure

CoreWeave differentiates itself from traditional cloud providers by offering GPU-optimized servers, high-speed networking, and tailored data center architecture. For AI companies, this translates into higher throughput, lower latency, and reduced operational bottlenecks.

Scalability and Redundancy

The Stargate program relies on geographically distributed facilities to reduce risk and ensure redundancy. With CoreWeave’s global footprint, OpenAI can deploy models closer to end users while maintaining the ability to scale training workloads seamlessly.

Industry Context: The AI Compute Arms Race

OpenAI is not alone in seeking massive AI infrastructure:

- Google DeepMind and Anthropic are similarly investing billions in GPU clusters.

- Microsoft has committed to multi-year Nvidia contracts to support AI workloads on its Azure cloud.

- Amazon AWS and Meta are expanding AI-specialized data centers to maintain competitive offerings.

The CoreWeave OpenAI deal illustrates a larger compute arms race where AI performance is directly tied to hardware availability. Analysts note that access to top-tier GPUs is becoming a strategic differentiator and a potential barrier to entry for smaller startups.

Strategic and Competitive Implications

For OpenAI

- Reduced operational risk – Long-term contracts mitigate supply disruptions and market volatility.

- Faster model iteration – Stable compute allows for more frequent and larger experiments.

- Enterprise credibility – Demonstrates reliability to enterprise clients relying on OpenAI-powered products.

For CoreWeave

- Revenue boost – Multi-billion-dollar contracts ensure significant cash flow over several years.

- Market positioning – Establishes CoreWeave as a leading provider of AI infrastructure for cutting-edge models.

- Partnership leverage – Increases influence with GPU suppliers like Nvidia, ensuring future hardware access.

For the AI Industry

The deal highlights several broader trends:

- Specialization – AI firms increasingly rely on specialized cloud providers, rather than general-purpose clouds, for custom compute needs.

- Capital intensity – Infrastructure costs are now a critical factor in AI strategy.

- Vendor ecosystem consolidation – Smaller cloud providers may seek partnerships to remain competitive.

Operational Challenges and Considerations

Despite its advantages, the deal introduces operational and regulatory challenges:

- Energy consumption – Massive GPU clusters can draw power on the gigawatt scale, raising sustainability concerns.

- Supply chain concentration – Heavy reliance on a few specialized vendors could attract antitrust scrutiny.

- Cost management – $6.5 billion is a major capital commitment, necessitating careful financial planning to ensure ROI.

Analysts also note that as AI compute demands rise, competition for GPU supply will intensify, potentially driving hardware prices higher and creating bottlenecks for smaller players.

Expert Commentary

AI infrastructure specialists have weighed in on the CoreWeave OpenAI deal:

- Dr. Maria Chen, AI systems researcher:

“This partnership exemplifies how compute scale is becoming as critical as algorithmic innovation. OpenAI’s Stargate program can now train larger models more predictably, giving them a strategic edge.”

- Tom Reynolds, cloud industry analyst:

“Deals of this magnitude set benchmarks for the entire market. Other AI startups will struggle to match the infrastructure scale without significant capital.”

- Lila Ahmed, sustainable tech advisor:

“While these expansions are technically impressive, they highlight the growing need for sustainable AI operations. Power efficiency and renewable sourcing must be part of the conversation.”

Financial and Market Implications

From a financial perspective:

- OpenAI secures predictable compute costs, which reduces the risk of price volatility from spot GPU markets.

- CoreWeave gains multi-year revenue visibility, increasing its attractiveness to investors.

- The broader AI market may see consolidation, with small providers seeking partnerships to survive alongside hyperscale operations.

The deal also indirectly pressures competitors like Google and Microsoft to expand AI-specific data center capacity, ensuring they do not fall behind in model training speed or product rollout.

Long-Term Impact on AI Model Development

The expanded CoreWeave contract is more than just a compute deal; it affects the speed, scale, and quality of AI models:

- Larger Models – The Stargate expansion supports models exceeding 1 trillion parameters.

- Faster Training Cycles – Reserved GPUs reduce wait times and improve iteration speed.

- Enhanced Reliability – Distributed infrastructure ensures higher uptime for enterprise services.

- Multimodal Integration – More capacity allows OpenAI to integrate text, image, and audio processing at scale.

Enterprises leveraging OpenAI models can expect lower latency, higher throughput, and faster access to new capabilities.

Sustainability and Policy Considerations

As compute scale grows, so do environmental and regulatory concerns:

- Energy usage – AI data centers consume significant electricity. OpenAI and CoreWeave may invest in renewable energy to offset emissions.

- Data security and compliance – With large-scale AI compute, ensuring compliance with GDPR, CCPA, and industry-specific regulations is crucial.

- Antitrust scrutiny – Multi-billion-dollar exclusive agreements may attract attention from regulators concerned about market concentration.

Forward-looking AI firms will need to balance infrastructure scale with sustainability and compliance to ensure responsible growth.

What’s Next: Future Outlook

The CoreWeave OpenAI deal signals:

- A new era of specialized AI cloud partnerships – Companies will continue to seek exclusive, high-capacity GPU agreements.

- Acceleration of AI model development – Predictable infrastructure allows faster experimentation and deployment of advanced models.

- Industry benchmarks – Other AI startups and cloud providers will measure themselves against this level of commitment and scale.

- Global competitiveness – With more AI models deployed worldwide, infrastructure becomes a strategic differentiator on a global stage.

OpenAI’s Stargate program, backed by CoreWeave, is now positioned to lead in compute scale, model performance, and enterprise adoption, shaping the trajectory of AI for years to come.

Conclusion

The CoreWeave OpenAI deal is a landmark in AI infrastructure: a commitment of up to $6.5 billion to dedicated GPU capacity that not only accelerates OpenAI’s Stargate program but also establishes new industry norms for scale, reliability, and strategic partnerships.

For OpenAI, the deal ensures uninterrupted compute for developing next-generation models. For CoreWeave, it guarantees revenue and cements its position as a leading AI cloud provider. For the AI ecosystem, it underscores the critical role of compute partnerships in shaping model innovation and enterprise adoption.

As AI continues to evolve, such multi-billion-dollar infrastructure deals will define who leads in performance, capability, and global AI deployment.