A new analysis has put hard numbers on a question vexing policymakers and power planners: how much electricity will frontier AI training actually use in the years ahead? According to reporting by Axios, U.S. power demand attributed to AI is projected to rise tenfold — from roughly 5 GW today to 50 GW by 2030. In extreme scenarios, a single high-end training run in 2030 could draw 4–16 GW, with more likely baselines around 1–2 GW by 2028. One eye-popping stat: at the high end, a single training project could consume nearly 1% of total U.S. power capacity.
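
To put the tenfold projection in yearly terms, the sketch below computes the constant annual growth rate implied by going from 5 GW to 50 GW. The two endpoints come from the reporting above; the five-year span (treating "today" as 2025) and the function name `implied_annual_growth` are assumptions made for illustration, not part of the analysis.

```python
def implied_annual_growth(start_gw: float, end_gw: float, years: int) -> float:
    """Constant yearly growth rate that takes start_gw to end_gw in `years` years."""
    if start_gw <= 0 or years <= 0:
        raise ValueError("start_gw and years must be positive")
    return (end_gw / start_gw) ** (1 / years) - 1


# 5 GW and 50 GW are the article's figures; the 5-year span is an assumption.
rate = implied_annual_growth(5.0, 50.0, 5)
print(f"Implied growth: {rate:.1%} per year")  # Implied growth: 58.5% per year
```

Under that assumption, AI-attributed demand would need to grow by a bit more than half every year through 2030. A longer or shorter span changes the rate, so the result is only as good as the assumed start year.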

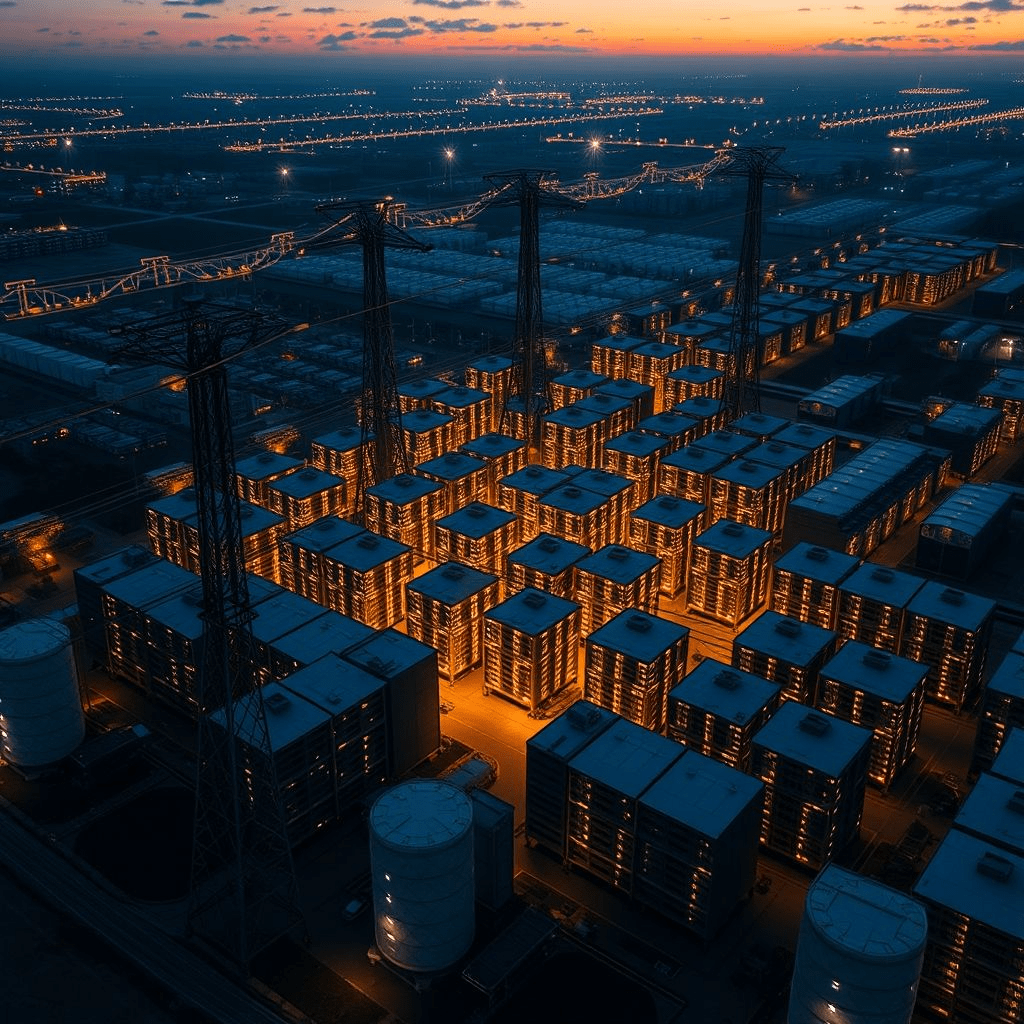

These figures, while modeled and uncertain, sharpen a growing consensus that AI training power demand is not a rounding error for the grid; it's a system-level planning imperative. The Axios piece frames the risk in practical terms: clusters of hyperscale data centers can create localized load spikes, forcing grid operators to accelerate transmission upgrades, clear interconnection queues faster, and pull generation planning forward.

Why this is different from previous forecasts

Prior estimates often blended training and inference or looked only at aggregate data-center growth. This report, as Axios emphasizes, zooms in specifically on training loads: the multi-week, resource-intensive runs behind cutting-edge models. That lens yields larger peaks and more volatile demand than steady inference traffic. It also aligns with recent analysis from think tanks warning that AI demand will continue to climb even as chips get more efficient, because model sizes, datasets, and training cadence keep expanding.

What drives the spikes

Three dynamics fuel the projected surge in AI training power demand:

- Model scale and cadence: As competitive pressure mounts, the leading labs (and their enterprise customers) iterate more often, training larger or more specialized models several times per year.

- Concentration of compute: Firms are building dense compute campuses tied to specific substations. When a training run starts, local load jumps dramatically.

- Reliability constraints: Training requires stable, high-quality power to keep thousands of accelerators in lockstep; that pushes operators to secure excess capacity and redundant feeds.

The Axios report underscores that power access is now a gating factor for AI leadership—echoing what infrastructure providers have said on earnings calls and in public filings.

The broader context: energy markets and policy

The findings arrive amid intense debate over balancing AI growth with grid modernization. Analysts at Goldman Sachs project global data-center electricity demand could climb as much as 165% by 2030 relative to 2023 (that is, to roughly 2.65 times the 2023 level). While that’s a broader data-center view, it helps explain why utilities are scrambling to upgrade transmission and why developers are signing long-term power purchase agreements with renewables and, increasingly, firm, low-carbon sources.

A separate Brookings commentary this week argues that AI’s power appetite will keep rising, especially after large model breakthroughs jolted demand in early 2025, and urges planning clarity and market signals to steer capacity additions. Together, the two analyses strengthen the call for coordinated energy-policy responses, from siting to financing to environmental review, so capacity can be built near where AI clusters will live.

Industry reaction: pragmatism over hype

Inside the industry, the conversation has shifted from whether AI will strain the grid to how to procure and time power, how to colocate with renewables plus storage, and when to deploy on-site generation. Large cloud and AI firms are exploring everything from advanced geothermal and small modular reactors to behind-the-meter gas with carbon capture, though timelines and economics vary widely. Developers are also rearranging training schedules to align with off-peak pricing or high renewable output windows, a practice sometimes called temporal load shifting.
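
As a rough illustration of temporal load shifting, the sketch below picks the cheapest contiguous block of hours in a day-ahead price curve for a deferrable chunk of training work. The hourly prices, the six-hour block, and the function name `cheapest_window` are all hypothetical. Real schedulers also have to account for checkpointing overhead, cluster availability, and contract terms, none of which are modeled here.

```python
from typing import Sequence


def cheapest_window(prices: Sequence[float], run_hours: int) -> tuple[int, float]:
    """Return (start_hour, summed_price) of the cheapest contiguous window."""
    if not 0 < run_hours <= len(prices):
        raise ValueError("run_hours must be between 1 and len(prices)")
    # Cost of the first window, then slide one hour at a time.
    window_cost = sum(prices[:run_hours])
    best_start, best_cost = 0, window_cost
    for start in range(1, len(prices) - run_hours + 1):
        # Add the hour entering the window, drop the hour leaving it.
        window_cost += prices[start + run_hours - 1] - prices[start - 1]
        if window_cost < best_cost:
            best_start, best_cost = start, window_cost
    return best_start, best_cost


# Hypothetical day-ahead prices in $/MWh for hours 0..23 (not real market data).
hourly_prices = [42, 38, 35, 33, 32, 34, 40, 55, 63, 60, 52, 45,
                 41, 39, 43, 58, 72, 88, 95, 90, 76, 61, 50, 45]

start, cost = cheapest_window(hourly_prices, 6)
print(f"start at hour {start}, summed price {cost} $/MW")
# start at hour 1, summed price 212 $/MW
```

The same sliding-window idea works with a carbon-intensity or renewable-output series in place of prices, if the goal is to track clean generation rather than cost.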

What it means for communities

Communities hosting hyperscale campuses face a paradox. New data centers can bring investment and tax base growth, but sudden load additions can crowd out existing industrial customers or slow new connections. The report’s baseline of 1–2 GW for a single training run by 2028 clarifies the scale: that’s akin to the demand of a large industrial complex or a mid-sized city. Local authorities will weigh rates, reliability, and environmental impacts against economic benefits when evaluating permits and interconnection requests.

Outlook: efficiency vs. expansion

Will better chips blunt the surge? Efficiency gains (higher TOPS/W, improved interconnects, sparsity, mixed-precision training) matter, as do model-training innovations (like transfer learning, curriculum strategies, and synthetic data). But history suggests performance gains are often reinvested into even larger models or faster release cycles—so absolute consumption can still rise. That’s why planners now treat AI training power demand as a structural driver of grid growth, not a transient spike.
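
The reinvestment argument comes down to simple arithmetic: energy use scales with the amount of compute divided by the work done per joule. The sketch below makes that explicit. The growth factors are hypothetical round numbers chosen for illustration, and the model ignores cooling, networking, and utilization effects.

```python
def relative_energy(compute_growth: float, efficiency_gain: float) -> float:
    """Energy relative to a baseline of 1.0.

    compute_growth:  factor by which total training compute increases.
    efficiency_gain: factor by which work per joule improves.
    """
    if compute_growth <= 0 or efficiency_gain <= 0:
        raise ValueError("both factors must be positive")
    return compute_growth / efficiency_gain


# Hypothetical: chips get 2x more efficient, but training compute grows 4x.
print(relative_energy(compute_growth=4.0, efficiency_gain=2.0))  # 2.0
```

In this toy case, energy use doubles even though efficiency doubled, because compute grew faster than efficiency. Consumption only falls when the efficiency gain outpaces the growth in compute.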

The likely path forward is a both-and: relentless efficiency work and rapid capacity additions, with closer ties between AI developers, utilities, and regulators to mitigate localized stress. Watch for more co-development deals where AI firms fund substation upgrades or commit to long-dated power offtake to unlock new generation.

In short, the new figures make one thing impossible to ignore: frontier training is becoming an energy-class workload, and planning for it must happen now, not in 2029.