Introduction

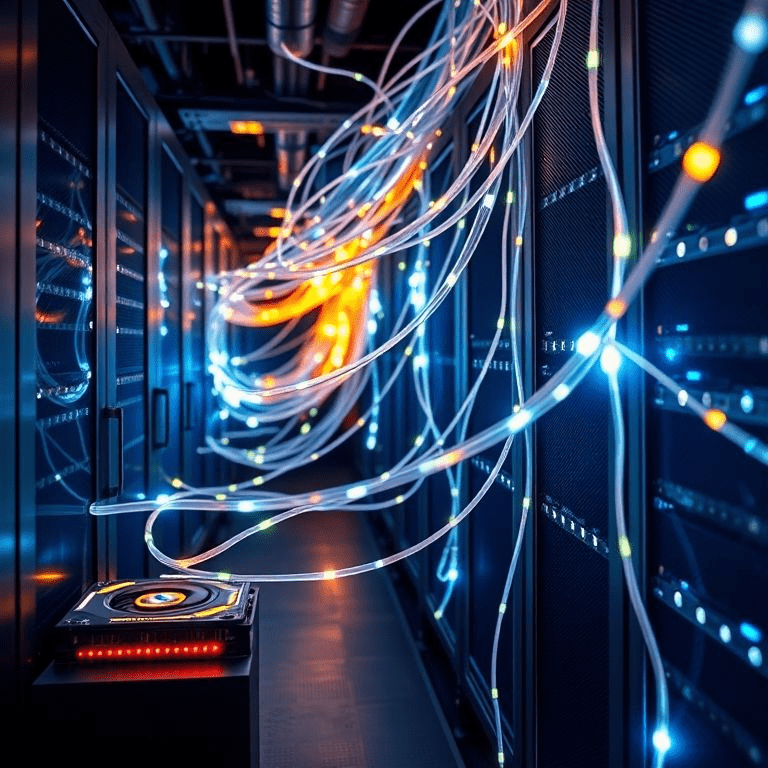

The rapid evolution of artificial intelligence (AI) has placed unprecedented strain on the infrastructure that powers it. From large language models with hundreds of billions of parameters to advanced computer vision systems running across multiple data centers, the ability to process and move information between chips is critical. While GPU computing power often dominates headlines, the less glamorous but equally crucial bottleneck lies in networking technologies.

In September 2025, Nvidia, the leading supplier of AI GPUs, made a decisive move: it reportedly spent more than $900 million to hire Enfabrica’s CEO, Rochan Sankar, along with other top personnel, and to license Enfabrica’s proprietary AI networking technology. The deal is more than a routine hire or licensing agreement; it is a strategic reshaping of Nvidia’s future in large-scale AI infrastructure.

This article explores the details of the deal, the importance of networking in AI, the technology Enfabrica brings to the table, reactions from industry insiders, challenges Nvidia may face in integration, and the broader implications for the AI hardware ecosystem.

Background: Why Networking is the Next AI Bottleneck

When most people think of AI hardware, they think of GPUs, the graphics processing units that train and run models. But as models have grown larger and more complex, the challenge has shifted. Training models at the scale of GPT-4 or GPT-5 involves tens of thousands of GPUs working in parallel, distributed across massive data centers.

The effectiveness of these supercomputer-like systems depends not just on the power of each chip, but on how fast and efficiently they can talk to each other. Traditional networking solutions were not designed for the data demands of modern AI workloads. Latency, bandwidth constraints, packet loss, and inefficient interconnect protocols can degrade performance by 30–50% in large-scale training scenarios.
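To make the scaling argument concrete, here is a back-of-the-envelope sketch that does not come from the reporting on the deal. It assumes gradients are synchronized with a bandwidth-optimal ring all-reduce, in which each GPU sends and receives 2(N-1)/N times the gradient size per step, and it ignores latency as well as any overlap between communication and compute. The function names and the example numbers (gradient size, link bandwidth, compute time) are illustrative assumptions, not measurements from any real cluster.

```python
def ring_allreduce_seconds(num_gpus: int, gradient_bytes: float,
                           link_bytes_per_s: float) -> float:
    """Bandwidth-only time for one ring all-reduce (latency ignored)."""
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    # Each GPU moves 2 * (N - 1) / N times the gradient size over its link.
    traffic_per_gpu = 2 * (num_gpus - 1) / num_gpus * gradient_bytes
    return traffic_per_gpu / link_bytes_per_s


def communication_share(compute_s: float, comm_s: float) -> float:
    """Fraction of a training step spent communicating if nothing overlaps."""
    return comm_s / (compute_s + comm_s)


if __name__ == "__main__":
    # Hypothetical inputs: 1,024 GPUs, 20 GB of gradients,
    # 50 GB/s per link (about 400 Gb/s), 1 s of compute per step.
    comm = ring_allreduce_seconds(1024, 20e9, 50e9)
    print(f"communication: {comm:.2f} s per step")
    print(f"share of step: {communication_share(1.0, comm):.0%}")
```

With these made-up inputs, communication takes roughly 0.8 seconds per step, a bit over 40% of the total. Real systems overlap communication with compute and use faster intra-node links, so the actual share depends heavily on the workload, but the sketch shows why link bandwidth, not just chip speed, limits how well a cluster scales.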

This has given rise to a new frontier in AI hardware: AI-specific networking solutions. Startups like Enfabrica have emerged to tackle these bottlenecks with custom chips and software that optimize how GPUs and CPUs share data in real-time AI workloads.

Enfabrica: A Startup Built for AI Networking

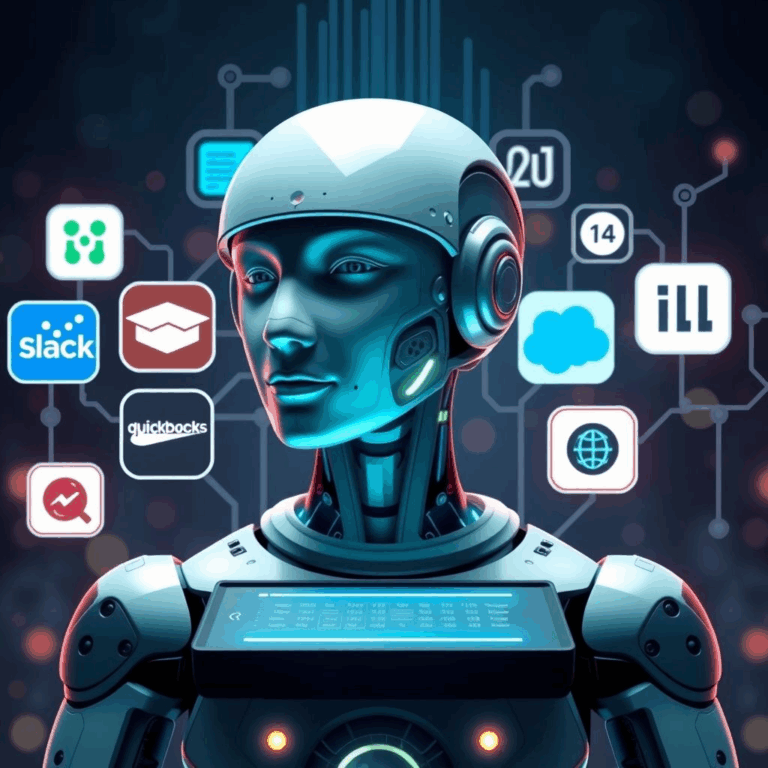

Enfabrica was founded by Rochan Sankar and Shrijeet Mukherjee, both of whom have deep backgrounds in silicon design and networking. The company set out to build what it calls an “Accelerated Compute Fabric”: a hybrid of networking chips and memory controllers designed specifically for AI training and inference environments.

Some of Enfabrica’s innovations include:

- Memory-Centric Networking: Instead of treating memory and networking as separate silos, Enfabrica designed chips that integrate memory pooling directly into network fabrics. This allows GPUs to access data faster, reducing idle time.

- Low-Latency Interconnects: The startup developed protocols that minimize latency between nodes in large clusters, improving synchronization during model training.

- Scalable Infrastructure: Enfabrica’s architecture scales efficiently across thousands of nodes, which is critical for training models with trillions of parameters.

- Power Efficiency: By optimizing data movement, Enfabrica claims it can reduce overall power usage per training job, a significant advantage in an era where AI workloads consume megawatts of electricity.

These innovations made Enfabrica a rising star in the AI infrastructure ecosystem. But building chips is capital-intensive, and to reach mass adoption, Enfabrica needed either massive funding or a partnership with a global player.

The Deal: Nvidia’s $900 Million Bet

According to Reuters and CNBC reports, Nvidia has agreed to pay over $900 million in a deal that covers:

- Hiring of Rochan Sankar (CEO) and senior team members

- Licensing of Enfabrica’s proprietary AI networking technology

- Integration of Enfabrica’s engineering roadmap into Nvidia’s data-center strategy

This is not a traditional acquisition of the entire company but a talent and technology deal: a hybrid arrangement in which Nvidia secures the people and a license to the IP without owning all of Enfabrica’s assets.

By structuring the deal this way, Nvidia may avoid a lengthy regulatory approval process while still achieving its goal: folding Enfabrica’s technology into its GPU ecosystem.

Reactions: Why This Move Matters

Industry analysts have described the Enfabrica deal as one of Nvidia’s most strategic in recent years.

- Patrick Moorhead, semiconductor analyst, commented that “networking is the next big battleground in AI hardware. GPUs alone can’t solve scaling challenges. Nvidia moving aggressively into networking ensures it maintains its dominance.”

- CNBC noted that this deal echoes Nvidia’s earlier move on Mellanox Technologies, a networking company it agreed to buy in 2019 for $6.9 billion and finished acquiring in 2020. Mellanox technology has since become a cornerstone of Nvidia’s data-center products.

- Venture capitalists see this as validation of the networking startup space. One investor remarked that “Enfabrica may not have gone public or reached unicorn status, but its tech was good enough for Nvidia to spend nearly a billion dollars. That tells you where the industry is headed.”

Industry Impact: The Race for AI Infrastructure

The Enfabrica deal has ripple effects across the industry:

- Nvidia Strengthens Its Moat: By bringing more networking technology in-house, Nvidia makes it more likely that hyperscalers like Google, Microsoft, and Amazon remain reliant on its ecosystem for end-to-end AI systems.

- Competitors Feel the Heat: AMD, Intel, and startups like Cerebras or Graphcore must now either develop or acquire networking capabilities to stay competitive.

- Networking Startups Gain Attention: Investors are likely to scout for the “next Enfabrica,” potentially leading to new funding rounds for startups in memory fabrics, interconnects, or optical networking.

- Cloud Providers May Push Back: Some hyperscalers may prefer open or vendor-neutral networking to avoid deeper lock-in with Nvidia. This could drive parallel in-house efforts at companies like Google (TPUs) or Amazon (Inferentia/Trainium).

Challenges Ahead for Nvidia

Despite the excitement, Nvidia faces several challenges:

- Integration Risks: Bringing in a startup team and merging their roadmap into a giant like Nvidia can lead to cultural clashes, delays, or strategic misalignment.

- IP Licensing Complexities: Since Nvidia licensed the tech rather than buying Enfabrica outright, questions remain about future IP ownership, royalties, and exclusivity.

- Market Resistance: Cloud providers wary of Nvidia’s growing dominance may seek alternative vendors or accelerate internal R&D to reduce dependency.

- Execution Pressure: Nvidia must prove that Enfabrica’s technology delivers measurable performance gains in upcoming product releases.

Future Outlook: What’s Next?

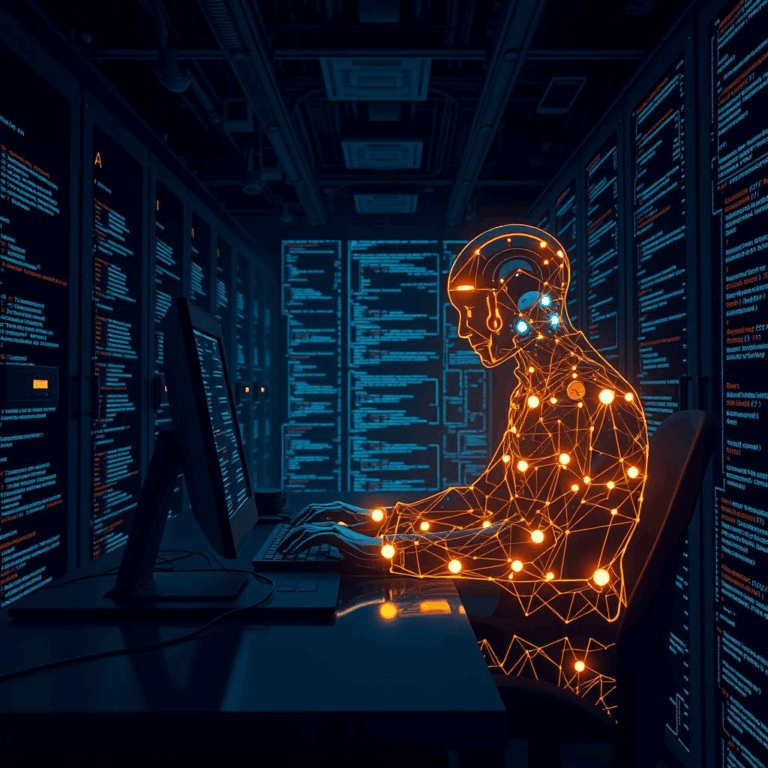

The Enfabrica deal signals Nvidia’s recognition that AI networking is as important as GPUs themselves. Looking ahead, several developments are likely:

- New Nvidia Networking Products: Expect announcements of interconnects, switches, or memory fabrics branded under Nvidia but powered by Enfabrica’s designs.

- Improved Benchmarks: Future AI training benchmarks (such as MLPerf) could show significant performance improvements once Enfabrica’s tech is integrated.

- Industry Consolidation: More acquisitions of networking startups are likely, as competitors rush to match Nvidia’s move.

- Investor Shifts: Venture funding may increasingly flow into “AI plumbing” companies, meaning not just GPUs but the hardware and software that make large-scale AI work reliably.

- Sustainability Gains: If Enfabrica’s power-efficiency claims hold true, Nvidia could market not just performance but also green AI infrastructure, aligning with ESG-focused clients.

Conclusion

Nvidia’s Enfabrica deal is more than a $900 million transaction; it is a signal of where AI hardware is headed. As models grow larger, the bottleneck is no longer just raw compute power but the ability to move and share data seamlessly across vast networks of chips.

By securing Enfabrica’s leadership and licensing its networking technology, Nvidia is preparing for a future where networking is the key differentiator in AI. For startups, investors, and competitors, the message is clear: the AI arms race is expanding into new territories, and networking may be the next trillion-dollar frontier.