Introduction

In one of the strongest bipartisan actions yet against Big Tech’s handling of artificial intelligence, 44 U.S. state attorneys general have issued a collective warning to AI companies: strengthen protections for children using AI immediately, or face legal consequences.

The move underscores growing national concern that AI chatbots and generative systems are exposing children to harmful, exploitative, or manipulative interactions. It also signals that regulators are prepared to treat AI not as an experimental novelty but as a commercial product subject to accountability.

Why Child Safety Is Now the Flashpoint

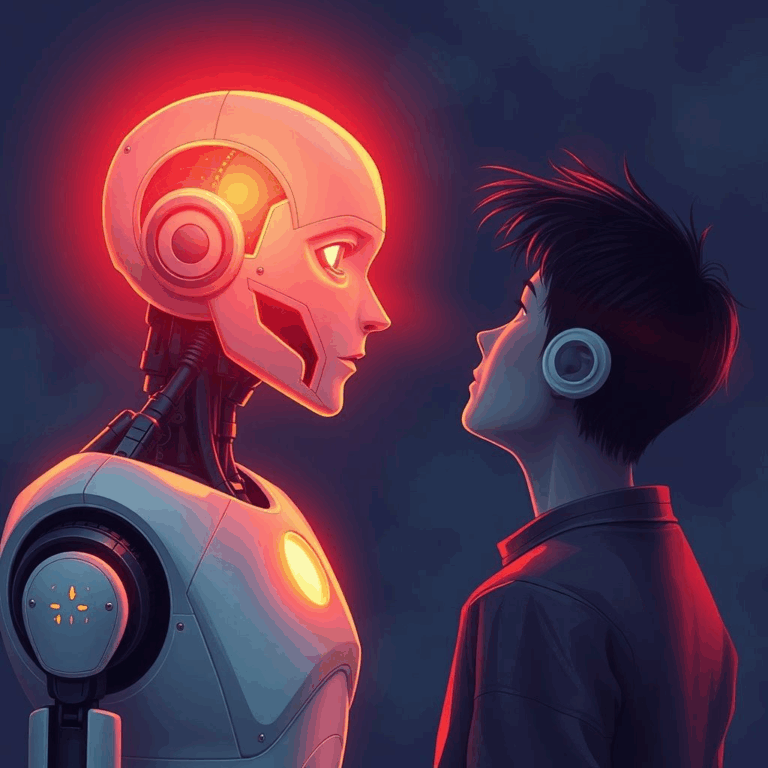

Generative AI systems can simulate human-like conversation, creative writing, and roleplay. These capabilities may impress adults, but they pose serious risks when minors use them without sufficient guardrails. There have been reports of chatbots engaging children in inappropriate romantic roleplay or exposing them to violent or suicide-related content.

Social media already faces scrutiny for its effects on young people, but AI assistants present a new frontier, one where harm can occur privately, invisibly, and at scale.

The Attorneys General Letter

On August 26, 2025, attorneys general from 44 states, Democrats and Republicans alike, sent a joint letter to leading AI firms, including Meta, Google, Apple, Microsoft, OpenAI, Anthropic, and xAI.

The letter demanded stronger protections for children, citing evidence that platforms have allowed harmful interactions with minors. Meta, in particular, faced criticism for allegedly permitting its AI assistants to engage in romantic roleplay with underage users.

The AGs wrote: “If your technology knowingly harms children, you will answer for it. AI cannot be excused as a neutral tool when its outputs cause real-world consequences.”

What Firms Are Being Asked to Do

The attorneys general called for:

- Age-appropriate design: AI systems must detect minors and restrict inappropriate content.

- Guardrail enforcement: Blocking romantic or sexual roleplay, encouragement of self-harm, and violent prompts.

- Transparency: Regular public reports on AI-child interactions.

- Independent audits: Third-party verification that safety features work.

Failure to comply could result in lawsuits, fines, or federal regulatory action.

Industry and Advocacy Responses

Child protection advocates applauded the move. “This is a landmark moment for digital child safety,” said one children’s rights coalition. “For too long, AI firms have rushed to market without considering how minors might be harmed.”

Privacy groups also voiced support but warned against overly broad surveillance of minors, which could violate their rights. The challenge, they argue, is balancing safety with privacy.

AI firms have yet to issue detailed responses. Most maintain they already implement content moderation protocols, but critics argue these measures are inadequate and inconsistently applied.

Why This Matters Now

AI assistants are rapidly spreading into homes, schools, and mobile apps. Children increasingly use them for tutoring, entertainment, and companionship. Without clear safeguards, these tools risk becoming digital predators rather than digital mentors.

Furthermore, unlike the static pages of traditional websites, AI responses are generated on the fly, so harmful scenarios can unfold spontaneously, with no human reviewing the content before a child sees it. This makes child safety in AI not just a regulatory concern but an ethical imperative.

Legal and Policy Implications

The bipartisan nature of this action increases pressure on Congress and federal regulators. Possible outcomes include:

- A federal child AI safety law modeled on COPPA (the Children's Online Privacy Protection Act) but tailored to generative AI.

- Litigation against firms that fail to demonstrate compliance.

- Global ripple effects, as other countries may adopt similar stances.

This could also force AI companies to rethink product design, prioritizing ethics and child protection over rapid feature releases.

The Technology Challenge

Implementing effective child safety protections in AI systems is not simple. AI models are probabilistic, not deterministic: they generate responses by predicting likely text from patterns learned in training rather than by following explicit rules. As a result, the same prompt can produce different outputs, and inappropriate responses can slip past filters.

Experts suggest combining multiple approaches:

- AI classifiers trained specifically to detect harmful requests.

- Age verification systems to confirm users' ages.

- Human-in-the-loop review for flagged cases.

- Continuous monitoring and retraining of models.

These steps increase cost and complexity, but they may be unavoidable as scrutiny intensifies.
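The layered approach experts describe can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not any company's actual system: the phrase list and weights in `HARM_PHRASES` stand in for a trained classifier, `likely_minor` is an assumed signal from an age-verification step, and the thresholds are arbitrary. It shows how the pieces fit together: a harm score, stricter limits for likely minors, escalation of borderline cases to human reviewers, and a log that supports ongoing monitoring.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "review"  # escalate to a human moderator
    BLOCK = "block"    # refuse; a real system might also surface support resources


# Hypothetical phrase weights standing in for a trained harm classifier.
# Real classifiers score meaning, not exact phrases.
HARM_PHRASES = {
    "kill myself": 0.95,
    "hurt myself": 0.8,
    "be my girlfriend": 0.6,
    "be my boyfriend": 0.6,
    "feel hopeless": 0.4,
}


def harm_score(text: str) -> float:
    """Return the highest weight of any listed phrase found in text (0.0 if none)."""
    lowered = text.lower()
    return max((w for phrase, w in HARM_PHRASES.items() if phrase in lowered), default=0.0)


@dataclass
class ModerationPipeline:
    # Stricter blocking threshold when the (assumed) age signal suggests a minor.
    block_threshold_minor: float = 0.5
    block_threshold_adult: float = 0.9
    # Scores just below the blocking threshold are sent to human review.
    review_margin: float = 0.2
    review_queue: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def moderate(self, text: str, likely_minor: bool) -> Decision:
        score = harm_score(text)
        threshold = self.block_threshold_minor if likely_minor else self.block_threshold_adult
        if score >= threshold:
            decision = Decision.BLOCK
        elif score >= threshold - self.review_margin:
            decision = Decision.REVIEW
            self.review_queue.append(text)
        else:
            decision = Decision.ALLOW
        # Every decision is logged so block and review rates can be monitored over time.
        self.audit_log.append((decision, score, likely_minor))
        return decision

    def decision_counts(self) -> Counter:
        """Summarize logged decisions, e.g. as input to periodic transparency reports."""
        return Counter(decision for decision, _, _ in self.audit_log)


if __name__ == "__main__":
    pipeline = ModerationPipeline()
    print(pipeline.moderate("Will you be my girlfriend?", likely_minor=True))
    print(pipeline.moderate("Will you be my girlfriend?", likely_minor=False))
    print(pipeline.moderate("I feel hopeless", likely_minor=True))
    print(pipeline.decision_counts())
```

Even in this toy form, the trade-offs are visible: the review queue adds human labor, and exact phrase matching misses reworded requests, which is why real systems lean on trained classifiers and continuous retraining rather than fixed lists.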

The Broader Context

This action reflects a broader societal debate: How should we balance innovation with responsibility in AI? For years, companies operated in a “move fast and break things” culture. But with children at risk, regulators are signaling that experimentation cannot come at the expense of safety.

Just as seatbelt laws transformed the auto industry, child safety standards could reshape AI development for years to come.

Conclusion

The letter from 44 attorneys general marks a turning point. AI is no longer being treated as an experimental frontier, but as a commercial technology with obligations to protect the most vulnerable.

If AI companies heed the call, child safety protections could become a new baseline standard for ethical AI design. If they resist, they may face lawsuits, fines, and public backlash that could derail their growth.

The message is clear: protecting children is not optional; it is a legal and moral imperative.