Introduction

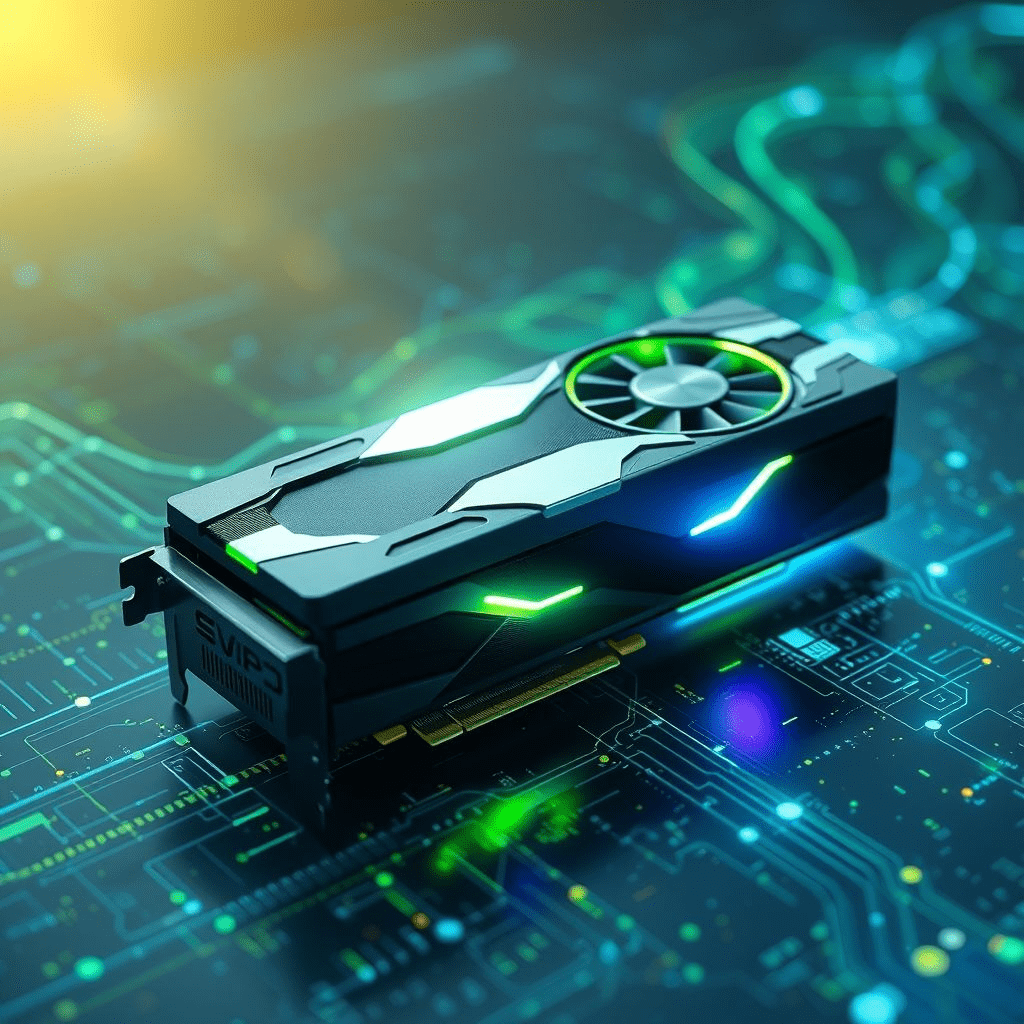

On June 18, 2025, Nvidia, the global leader in AI hardware, announced an open beta program for its AI-optimized GPUs. These GPUs promise up to 3x faster processing for large language models (LLMs) and generative AI tools.

The Technology

The GPUs feature dynamic task prioritization, which reduces bottlenecks in AI inference and enables smoother workflows for demanding applications such as chatbots, image generation, and advanced analytics.

Why It Matters

AI developers often face delays caused by the limitations of standard GPUs. Nvidia's AI-optimized GPUs could boost productivity by cutting training times and costs.

Key Features

- Task Prioritization: Allocates compute resources based on real-time demand.

- Thermal Management: Enhanced cooling enables 24/7 uptime.

- Sustainable Design: Running AI models on the new GPUs consumes 35% less power.

Expert Commentary

“This innovation could shift GPU demand among cloud services and SaaS enterprises,” noted analyst Priya Shah from GenAI Analytics.

Market Trends

With AI compute requirements doubling every six months, these GPUs position Nvidia to outpace competitors such as AMD. Early tests show a 50% drop in LLM inference latency.

Implications

Companies could soon host advanced AI applications in smaller data centers, lowering infrastructure costs. Nvidia is already partnering with OpenAI and AWS on beta deployments.

Looking Forward

Beta access, aimed at developers scaling their models globally, opens on July 1. General availability is expected by Q4.